Big Data Analysis

Spectra Offers

A full range of big data services, from consulting and strategy definition to infrastructure maintenance and support, enabling our clients to get vital insights from previously untapped data assets. We leverage advanced big data and business intelligence tools to help clients extract actionable insights from diverse data sets generated in real time and at a large scale. We enable organizations to consolidate massive volumes of structured, semi-structured, and unstructured data coming from different sources into a holistic environment that can be used for modelling and predicting new market opportunities.

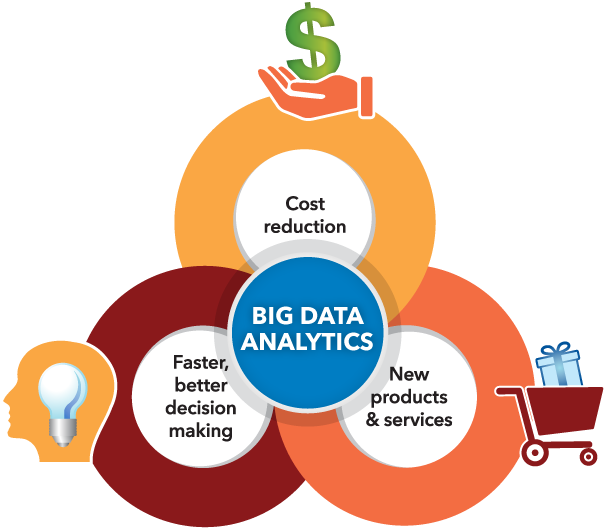

What is Big Data Analytics?

In a marketing context, data analytics can be described as collecting data from sources such as social platforms to guide you in creating and executing marketing strategies. The process starts by prioritizing business objectives. The next stage is defining key performance indicators (KPIs). You can then measure how social media contributes to meeting your business objectives. From there, you can continue on the same track or fine-tune the approach to achieve your defined business goals.

Why is Big Data so important?

Characteristics of Big Data

Volume

The sheer scale of the information processed helps define big data systems. These datasets can be orders of magnitude larger than traditional datasets, which demands more thought at each stage of the processing and storage life cycle.

Often, because the work requirements exceed the capabilities of a single computer, this becomes a challenge of pooling, allocating, and coordinating resources from groups of computers. Cluster management and algorithms capable of breaking tasks into smaller pieces become increasingly important.
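
The idea of breaking a task into smaller pieces and combining the partial results can be sketched as follows. This is only an illustration, not a description of any particular cluster framework: the function names are hypothetical, and local threads stand in for the machines of a cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(records, chunk_size):
    """Break the full workload into smaller, independent pieces."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def count_words(chunk):
    """The 'map' step: each worker counts words in its own chunk."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def merge_counts(partials):
    """The 'reduce' step: combine partial results into one answer."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def distributed_word_count(records, chunk_size=2, workers=4):
    chunks = split_into_chunks(records, chunk_size)
    # Threads stand in for cluster nodes in this sketch (an assumption).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_words, chunks))
    return merge_counts(partials)


if __name__ == "__main__":
    logs = ["error disk full", "login ok", "error timeout", "login ok"]
    print(distributed_word_count(logs).most_common(3))
```

Because each chunk is processed independently, the same structure carries over when the workers are separate machines; the coordination and failure handling that a real cluster manager provides are left out here.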

Velocity

Another way in which big data differs significantly from other data systems is the speed at which information moves through the system. Data frequently flows into the system from multiple sources and is often expected to be processed in real time to gain insights and update the current understanding of the system.

This focus on near instant feedback has driven many big data practitioners away from a batch-oriented approach and closer to a real-time streaming system. Data is constantly being added, massaged, processed, and analyzed in order to keep up with the influx of new information and to surface valuable information early when it is most relevant. These ideas require robust systems with highly available components to guard against failures along the data pipeline.
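
The difference between batch and streaming processing can be illustrated with a small sketch. Instead of waiting for all the data and computing a result once, the hypothetical `SlidingWindowAverage` class below updates its answer every time a new value arrives. The class name and window size are assumptions made for illustration.

```python
from collections import deque


class SlidingWindowAverage:
    """Keeps an up-to-date average over the most recent `size` values."""

    def __init__(self, size):
        if size <= 0:
            raise ValueError("size must be positive")
        self.window = deque(maxlen=size)
        self.total = 0.0

    def add(self, value):
        # When the window is full, the oldest value is about to be evicted,
        # so remove it from the running total first.
        if len(self.window) == self.window.maxlen:
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)


if __name__ == "__main__":
    monitor = SlidingWindowAverage(size=3)
    for reading in [10, 20, 30, 40]:
        print(reading, monitor.add(reading))
```

Each call to `add` does a constant amount of work, which is what lets a streaming system keep pace with a continuous influx of data.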

Variety

Big data problems are often unique because of the wide range of both the sources being processed and their relative quality.

Data can be ingested from internal systems like application and server logs, from social media feeds and other external APIs, from physical device sensors, and from other providers. Big data seeks to handle potentially useful data regardless of where it’s coming from by consolidating all information into a single system. The formats and types of media can vary significantly as well. Rich media like images, video files, and audio recordings are ingested alongside text files, structured logs, etc.
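
As a rough sketch of consolidating differently formatted inputs into a single system, the example below maps a JSON log line, a CSV sensor export, and a free-text post onto one common record shape. The field names (`source`, `timestamp`, `payload`) and the sample inputs are assumptions chosen for illustration.

```python
import csv
import io
import json


def from_json_line(line, source):
    """Parse one JSON-formatted log line into the common record shape."""
    data = json.loads(line)
    return {"source": source, "timestamp": data.get("ts"), "payload": data}


def from_csv(text, source):
    """Parse CSV text with a header row into common records."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"source": source, "timestamp": row.get("ts"), "payload": dict(row)}
        for row in reader
    ]


def from_plain_text(line, source):
    """Wrap unstructured text; no timestamp can be recovered here."""
    return {"source": source, "timestamp": None, "payload": {"text": line}}


def consolidate():
    """Gather hypothetical sample inputs into one list of records."""
    records = []
    records.append(
        from_json_line('{"ts": "2024-01-01T00:00:00", "level": "error"}', "app_log")
    )
    records.extend(from_csv("ts,temperature\n2024-01-01T00:00:05,21.5\n", "sensor"))
    records.append(from_plain_text("Great service today!", "social_feed"))
    return records


if __name__ == "__main__":
    for record in consolidate():
        print(record)
```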

Other Characteristics

The variety of sources and the complexity of the processing can lead to challenges in evaluating the quality of the data (and consequently, the quality of the resulting analysis).

Variation in the data leads to wide variation in quality. Additional resources may be needed to identify, process, or filter low quality data to make it more useful.
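
One simple way to identify and filter low-quality data is to check each record for required fields and set aside those that fail. The sketch below assumes hypothetical field names (`id`, `value`); real quality rules depend on the data set.

```python
def is_usable(record, required_fields=("id", "value")):
    """Return True when every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in required_fields)


def split_by_quality(records):
    """Separate usable records from those that need review or repair."""
    usable, rejected = [], []
    for record in records:
        (usable if is_usable(record) else rejected).append(record)
    return usable, rejected


if __name__ == "__main__":
    sample = [
        {"id": 1, "value": 3.2},
        {"id": 2, "value": ""},   # empty value
        {"value": 7.0},           # missing id
    ]
    good, bad = split_by_quality(sample)
    print(len(good), "usable,", len(bad), "rejected")
```

Keeping the rejected records, rather than discarding them, leaves room to repair or review them later.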

The ultimate challenge of big data is delivering value. Sometimes, the systems and processes in place are complex enough that using the data and extracting actual value can become difficult.

Use cases for big data analytics

Improve customer interactions

Aggregate structured, semi-structured, and unstructured data from the touch points your customers have with the company to gain a 360-degree view of their behavior and motivations, and use it to tailor your marketing more precisely. Data sources can include social media, sensors, mobile devices, sentiment data, and call logs.

Detect and mitigate fraud

Monitor transactions in real time, proactively recognizing abnormal patterns and behaviors that indicate fraudulent activity. Combining big data with predictive and prescriptive analytics, and comparing incoming transactions against historical data, helps companies predict and mitigate fraud.
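
A minimal sketch of comparing a new transaction against historical data is shown below. It flags an amount that lies far from a customer's historical average, measured in standard deviations. The function name and the threshold of 3.0 are assumptions for illustration; production fraud detection typically relies on far richer models.

```python
from statistics import mean, stdev


def flag_unusual(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` standard
    deviations from the mean of the historical amounts."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All historical amounts are identical; any deviation is unusual.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


if __name__ == "__main__":
    past_amounts = [20, 25, 22, 24, 23]
    for new_amount in (24, 500):
        print(new_amount, flag_unusual(past_amounts, new_amount))
```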

Drive supply chain efficiencies

Gather and analyze big data to determine how products are reaching their destination, identifying inefficiencies and opportunities to save cost and time. Sensors, logs, and transactional data can help track critical information from the warehouse to the destination.
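
As an illustration of spotting inefficiencies from shipment records, the sketch below computes the average transit time per route and picks out the slowest one. The record fields (`route`, `departed`, `arrived`) and the sample data are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def average_transit_hours(shipments):
    """Average hours between departure and arrival, grouped by route."""
    durations = defaultdict(list)
    for shipment in shipments:
        departed = datetime.fromisoformat(shipment["departed"])
        arrived = datetime.fromisoformat(shipment["arrived"])
        hours = (arrived - departed).total_seconds() / 3600
        durations[shipment["route"]].append(hours)
    return {route: sum(h) / len(h) for route, h in durations.items()}


def slowest_route(shipments):
    """Return the route with the highest average transit time."""
    averages = average_transit_hours(shipments)
    return max(averages, key=averages.get)


if __name__ == "__main__":
    sample = [
        {"route": "A", "departed": "2024-01-01T08:00:00", "arrived": "2024-01-01T20:00:00"},
        {"route": "A", "departed": "2024-01-02T08:00:00", "arrived": "2024-01-03T08:00:00"},
        {"route": "B", "departed": "2024-01-01T08:00:00", "arrived": "2024-01-01T14:00:00"},
    ]
    print(average_transit_hours(sample))
    print(slowest_route(sample))
```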